Since AI systems (software systems with a machine learning component) are becoming increasingly pervasive in our daily lives, there is a lot of discussion about, for example, the ethical aspects of such systems. There are many examples of AI systems that make decisions with an adverse effect on people's lives. Often, neither the system nor the people working with it can provide clear explanations to those affected by the decisions. Another example is the sustainability discussion (green AI): storing and processing huge amounts of data consumes huge amounts of energy. A third discussion concerns people's privacy: if an AI system processes personal data, its algorithms can uncover patterns that lead back to individual people, even if the personal data has been anonymized. Not too long ago, an expert group of the European Commission published guidelines on how to build trustworthy AI systems. They state: “While offering great opportunities, AI systems also give rise to certain risks that must be handled appropriately and proportionately. We now have an important window of opportunity to shape their development. We want to ensure that we can trust the sociotechnical environments in which they are embedded. … Trustworthiness is a prerequisite for people and societies to develop, deploy and use AI systems”.

With my background in software engineering and quality assurance, all these discussions around trust remind me of a debate we have been having in software development for a long time: we should build software systems that we can trust. This means we should be able to verify that software systems fulfil both their functional requirements (what does the software system need to do?) and their non-functional requirements (how shall the software system be?). The latter category is also called “quality requirements”, and software engineering has had a standard that defines the categories of quality requirements for software systems for a long time (since 1991!).
I have used ISO9126 and its successor ISO25000 (SQuaRE) many times as a standard dictionary for defining the quality requirements of a given software system. Although ISO25000 claimed in 2011 that “the characteristics defined … are relevant to all software products and computer systems”, ISO is by now working on a specific SQuaRE version for AI systems. While waiting for that update, I took (grey) literature on the quality of AI systems as a starting point to list the important extensions and changes that the existing quality model needs. The challenge is that many papers and posts do not phrase their discussion in terms of “quality of AI systems” (see the examples above). Instead, they discuss one or more aspects that are important for, or different in, AI systems. The discussion is often framed from a user perspective rather than from a technical or engineering perspective. In this blog post I summarize my findings in the form of an extended ISO25000 quality model. This should help AI engineering projects define quality requirements for their AI systems. The AI quality model covers all potentially relevant aspects of AI systems; it is up to the team to decide which aspects are relevant for their AI system and how to measure or test them. The AI quality model also serves as a standard dictionary for discussing the relevant properties of trustworthy AI systems, including with non-technical stakeholders.

EU Guidelines for Trustworthy AI

The aforementioned guidelines on how to build trustworthy AI systems list seven requirements that AI systems should meet, to be implemented using both technical and non-technical methods:

- human agency and oversight

- technical robustness and safety

- privacy and data governance

- transparency

- diversity, non-discrimination and fairness

- environmental and societal well-being

- accountability

The report also contains a checklist for each of these requirements, where they are further specified in more concrete measures or questions. Kuwajima and Ishikawa (2019) translated these requirements into six quality characteristics that should be added to ISO25000: controllability, explainability, collaboration effectiveness, privacy, human autonomy risk mitigation and unfair bias risk mitigation. These additions clearly reflect the high impact of AI systems on human activities and human lives.

Testing Machine Learning Systems

In a previous blog post, I discussed hands-on techniques, tools and methods for testing AI systems. The structure of that post was based on the comprehensive literature survey by Zhang et al. (2020). According to Zhang et al., a trained ML model should be tested for the following properties: correctness, overfitting degree, fairness, interpretability, robustness, security, data privacy and efficiency. Of these eight properties, only security and efficiency are already part of the ISO25000 quality model. Fairness, interpretability and data privacy are already covered by the additions proposed by Kuwajima and Ishikawa (2019). That leaves model correctness, overfitting degree (i.e., the generalizability of the model) and model robustness to be added. Since overfitting degree is closely related to model correctness, for the sake of simplicity we combine the two into one addition called “model correctness”.
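
To make model correctness (including overfitting degree) and model robustness a bit more tangible, here is a minimal Python sketch of how one might quantify them for a classifier. It assumes a model is simply a function from a feature vector to a class label; the names `generalization_gap`, `perturbation_robustness` and `threshold_model` are hypothetical, and random uniform noise is only a simple stand-in for the perturbations (including adversarial ones) that Zhang et al. survey.

```python
import random
from typing import Callable, Sequence

# Assumption: a model is any function mapping a feature vector to a class label.
Features = Sequence[float]
Classifier = Callable[[Features], int]


def accuracy(model: Classifier, xs: Sequence[Features], ys: Sequence[int]) -> float:
    """Fraction of examples for which the model predicts the expected label."""
    correct = sum(1 for x, y in zip(xs, ys) if model(x) == y)
    return correct / len(xs)


def generalization_gap(
    model: Classifier,
    train: tuple[Sequence[Features], Sequence[int]],
    test: tuple[Sequence[Features], Sequence[int]],
) -> float:
    """Training accuracy minus accuracy on unseen data; a large positive gap hints at overfitting."""
    return accuracy(model, *train) - accuracy(model, *test)


def perturbation_robustness(
    model: Classifier,
    xs: Sequence[Features],
    epsilon: float,
    trials: int = 20,
    seed: int = 0,
) -> float:
    """Fraction of inputs whose prediction never changes under random noise of at most epsilon per feature."""
    rng = random.Random(seed)
    stable = 0
    for x in xs:
        original = model(x)
        if all(
            model([v + rng.uniform(-epsilon, epsilon) for v in x]) == original
            for _ in range(trials)
        ):
            stable += 1
    return stable / len(xs)


def threshold_model(x: Features) -> int:
    """Hypothetical toy classifier: label 1 if the first feature exceeds 0.5."""
    return int(x[0] > 0.5)


gap = generalization_gap(
    threshold_model,
    train=([[0.1], [0.9], [0.2], [0.8]], [0, 1, 0, 1]),
    test=([[0.4], [0.6], [0.55]], [0, 1, 0]),
)
print(f"generalization gap: {gap:.2f}")  # 1.00 - 0.67 = 0.33
```

Real adversarial testing searches for worst-case perturbations rather than sampling random ones, so a high score on this random check is a weak signal at best.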

Non-Functional Requirements for Machine Learning Systems

Habibullah et al. (2023) conducted interviews and a survey to understand how non-functional requirements (NFRs) for ML systems are perceived by practitioners from both industry and academia. In their survey they used a list of 35 important NFRs for ML systems, collected from interviews with 10 practitioners. Of these 35 NFRs, 20 are included in ISO25000 and thus 15 are not:

- two NFRs on model performance: accuracy, correctness

- three NFRs on fairness: bias, ethics, fairness

- five NFRs on reproducibility: consistency, repeatability, retrainability, reproducibility, traceability

- four NFRs on explainability: explainability, interpretability, justifiability, transparency

- privacy

Compared to the previous two lists, only the category of “reproducibility” is new, so we propose to add this. For the sake of completeness we include the definitions from Habibullah et al. (2023):

| NFR | Definition |
|---|---|
| Consistency | A series of measurements of the same project carried out by different raters using the same method should produce similar results |
| Repeatability | The variation in measurements taken by a single instrument or person under the same conditions |
| Retrainability | The ability to re-run the process that generated the previously selected model on a new training set of data |
| Reproducibility | One can repeatedly run your algorithm on certain datasets and obtain the same (or similar) results |
| Traceability | The ability to trace work items across the development lifecycle |
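
As a rough illustration of repeatability and reproducibility, the sketch below re-runs a toy training process several times with the same data and seed, and checks that it produces identical parameters. The training function `train_linear_sgd` (plain stochastic gradient descent on a one-dimensional linear model) and the checker `is_reproducible` are hypothetical; the point is only that all randomness (initialisation, shuffling) flows from an explicitly seeded random generator. Real ML frameworks have additional sources of nondeterminism (e.g., parallel GPU kernels) that this sketch does not cover.

```python
import random
from typing import Callable, Sequence

# Hypothetical toy setup: a dataset is a list of (x, y) pairs and a "model" is the pair (w, b).
Dataset = Sequence[tuple[float, float]]
Params = tuple[float, float]


def train_linear_sgd(data: Dataset, seed: int, epochs: int = 50, lr: float = 0.05) -> Params:
    """Fit y = w * x + b with stochastic gradient descent.

    All randomness (initialisation and sample order) comes from a local
    generator seeded with `seed`, never from global state.
    """
    rng = random.Random(seed)
    w, b = rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)
    samples = list(data)
    for _ in range(epochs):
        rng.shuffle(samples)
        for x, y in samples:
            error = (w * x + b) - y
            w -= lr * error * x
            b -= lr * error
    return w, b


def is_reproducible(
    train: Callable[[Dataset, int], Params], data: Dataset, seed: int, runs: int = 3
) -> bool:
    """Re-run the same training process on the same data and seed; True if all runs agree exactly."""
    results = [train(data, seed) for _ in range(runs)]
    return all(result == results[0] for result in results)


data = [(x, 2 * x + 1) for x in (0.0, 0.5, 1.0, 1.5)]
print(is_reproducible(train_linear_sgd, data, seed=42))  # True
```

Retrainability would correspond to calling the same `train_linear_sgd` on a new dataset; traceability would additionally require recording the seed, data version and code version alongside each trained model.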

Other Views on Trustworthy AI Systems

The following papers and posts also discuss the quality of AI systems, but did not lead to adding quality characteristics to ISO25000.

- Ericsson (2020) considers explainability, safety and verifiability the main ingredients of trustworthy AI systems and explains how it designs and builds such systems.

- Fujii et al. (2020) take a broader quality assurance perspective than product quality alone, also including the development process and customer expectations. They examined four popular domains in which ML-based systems are used and discussed, for each domain, the required quality characteristics and the quality assurance viewpoint. This yields some domain-specific quality sub-characteristics, such as naturalness and smoothness for artefacts produced by generative systems (GANs).

- Habibullah et al. (2022) identified seven clusters of non-functional requirements for machine learning: 1) functional correctness; 2) understanding the internal decisions or results of applying ML (e.g., transparency, explainability); 3) ethical aspects of ML systems, such as fairness and bias; 4) performance (e.g., speed); 5) tailoring and adjustment to different environments (e.g., flexibility, adaptability); 6) privacy and security; 7) miscellaneous.

- Siebert et al. (2021) describe how to systematically construct quality models for ML systems based on an industrial use case. They present “a meta-model for specifying quality models for ML systems, reference elements regarding relevant views, entities, quality properties, and measures for ML systems based on existing research, an example instantiation of a quality model for a concrete industrial use case, and lessons learned from applying the construction process”. They found “that it is crucial to follow a systematic process in order to come up with measurable quality properties that can be evaluated in practice”.

- Gezici & Tarhan (2022a) carried out a systematic literature review, covering 1988 to 2020, of quality challenges, attributes and practices in software quality for AI-based software. They conclude that “there is a gap in the relation of ISO25010’s quality attributes with AI-based software quality characteristics. Therefore, while measuring the quality of AI-based software, considering AI-specific quality attributes is required.” In another study (2022b), they conducted a second systematic literature review to understand and define quality attributes (QAs) of AI-based software. They not only define and interpret the quality attributes in ISO25010, but also define new quality attributes specific to AI-based software. Note that some of the new quality attributes they propose (trust, safety) are in fact already present in ISO25010’s quality in use model (see Figure 2).

- Poth et al. (2020) present a quality assurance approach for AI systems. Their approach includes questionnaires that help AI engineers systematically check the ML components of their product or service for weaknesses. They mapped each question in the questionnaire to one of the high-level quality characteristics of ISO25010.

- Nakamichi et al. (2020) propose a requirements-driven method to determine the quality characteristics of AI systems. From previous work they extracted 34 quality characteristics that should be guaranteed in AI systems. Of these, 18 characteristics are ML-specific, while the others relate to enterprise software systems. They also map the characteristics to ISO25010.

- Kästner (2022) includes a chapter on quality attributes of ML components in his book on ML in production. The chapter discusses attributes of models, algorithms and other ML components, and also includes sections on trade-offs between quality attributes and on negotiating model quality requirements. The quality attributes Kästner describes do not lead to new characteristics in ISO25000, but they do extend the ones we have already included. For model performance, one should also consider model calibration where applicable (the confidence scores the model produces should reflect the actual probability that the prediction is correct). For reproducibility, Kästner mentions the “observability of the machine-learning pipeline” as a possible quality attribute; it is one possible way of implementing the traceability from the list of Habibullah et al. (2023).
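
Calibration can be quantified in several ways. One commonly used metric is the expected calibration error (ECE): group predictions into bins by confidence score, then take the weighted average of the gap between each bin's accuracy and its mean confidence. The sketch below is a minimal version under our own assumptions (equal-width bins, one confidence score and one correctness flag per prediction); the function name is hypothetical and not taken from Kästner's book.

```python
from typing import Sequence


def expected_calibration_error(
    confidences: Sequence[float], correct: Sequence[bool], n_bins: int = 10
) -> float:
    """ECE with equal-width bins: sum over bins of (bin size / n) * |accuracy - mean confidence|."""
    if len(confidences) != len(correct) or not confidences:
        raise ValueError("need one correctness flag per confidence score")
    bins: list[list[int]] = [[] for _ in range(n_bins)]
    for i, c in enumerate(confidences):
        # A confidence of exactly 1.0 is placed in the last bin.
        bins[min(int(c * n_bins), n_bins - 1)].append(i)
    n = len(confidences)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        accuracy = sum(correct[i] for i in members) / len(members)
        mean_confidence = sum(confidences[i] for i in members) / len(members)
        ece += len(members) / n * abs(accuracy - mean_confidence)
    return ece


# An overconfident model: it reports 90% confidence but is right only half the time.
print(expected_calibration_error([0.9, 0.9, 0.9, 0.9], [True, False, True, False]))  # approximately 0.4
```

A well-calibrated model has an ECE close to 0; the number of bins is a design choice that trades resolution against the number of samples per bin.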

Quality Model for Trustworthy AI Systems

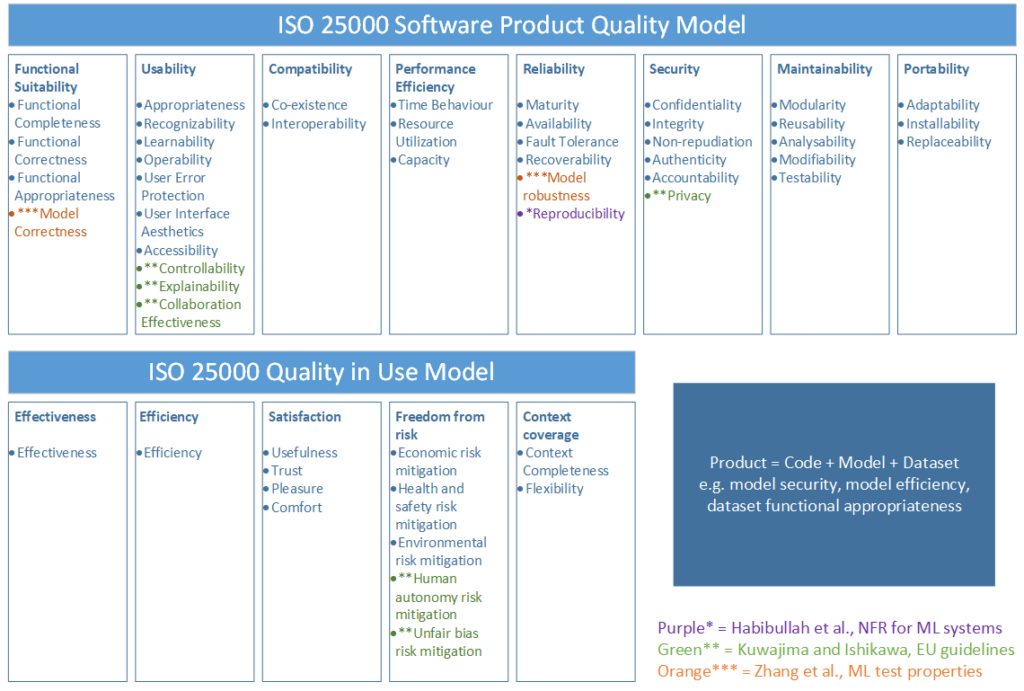

Figure 1 presents the extended AI Quality Model, which collects the proposed additions to the ISO25000 quality model. Besides the specific characteristics that should be added (in purple, green and orange), every characteristic should be considered not only for software but also for datasets and models, since these are the three types of artefacts that constitute an AI system (Fujii et al., 2020; Habibullah et al., 2022).

Figure 1. Extension of ISO25000 (SQuaRE) Quality Model for AI Systems, v2 March 2023

The proposed additions naturally relate to the difference between AI systems and traditional rule-based software systems:

- For AI systems we do not program the rules ourselves; instead, we train machine learning models to learn the rules from data

  - Model correctness: we want the model to learn the correct rules (with correct confidence scores where applicable), also for unseen data

  - Reproducibility: the learning process and the learned rules should be reproducible

  - Model robustness: we want the model to be resilient against perturbed inputs, such as adversarial attacks

- The datasets used for training machine learning models are potentially huge and thus difficult to check by hand

  - Privacy: the dataset should be free from personal data; furthermore, the model should not uncover hidden patterns in the dataset that violate privacy properties

  - Unfair bias risk mitigation: the dataset should be a good representation of the real population, so that the rules the model learns from the data are not unfair to or biased against a certain group of people

- The rules that AI systems learn from example data are used in workflows to automate (parts of) human decision making

  - Collaboration effectiveness: the AI system should help, not hinder, the user in carrying out his/her task

  - Explainability: it should be clear to the user on what grounds the AI system makes a certain decision or comes up with a certain prediction (related terms: interpretability and transparency)

  - Controllability: the user should be able to control the outcome or decision of the AI system if needed

  - Human autonomy risk mitigation: the user should not feel that his/her autonomy is threatened when the AI system takes over tasks or decisions that were previously made by humans
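
Unfair bias can be measured in many, sometimes mutually incompatible, ways. As one simple example for the unfair bias risk mitigation characteristic above, the sketch below computes the demographic parity difference: the largest gap in positive-prediction rate between groups. It assumes binary predictions (1 = favourable outcome) and a known group attribute per record; the function names and example data are hypothetical, and a small value on this single metric does not by itself show that a model is fair.

```python
from collections import defaultdict
from typing import Hashable, Sequence


def positive_rates(predictions: Sequence[int], groups: Sequence[Hashable]) -> dict[Hashable, float]:
    """Share of favourable (1) predictions per group."""
    positives: dict[Hashable, int] = defaultdict(int)
    totals: dict[Hashable, int] = defaultdict(int)
    for prediction, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += prediction
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions: Sequence[int], groups: Sequence[Hashable]) -> float:
    """Largest difference in positive-prediction rate between any two groups (0.0 means parity)."""
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical example: group "a" receives the favourable outcome twice as often as group "b".
example_predictions = [1, 1, 0, 0, 1, 0, 0, 0]
example_groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(positive_rates(example_predictions, example_groups))  # {'a': 0.5, 'b': 0.25}
print(demographic_parity_difference(example_predictions, example_groups))  # 0.25
```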

Most of these properties can be checked and tested at design or development time. However, testing machine learning systems is never complete and should always involve run-time monitoring (Zhang et al., 2020). This means the AI system should also include run-time checks for, e.g., unfair bias, privacy violations, adversarial attacks and model degeneration. Another important mechanism to uphold quality is a human feedback loop for the automated decisions. This is the only way to know whether, in the long term, the users of the AI system are still satisfied with the support the system offers and with the way in which it offers this support.
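
Run-time checks for model degeneration often start by comparing the distribution of live inputs (or model scores) with the distribution seen at training time. One widely used statistic for this is the population stability index (PSI). The sketch below is a minimal version under our own assumptions: fixed, hand-picked bin cut points, and a small floor on empty bins to avoid taking the logarithm of zero. The function names are hypothetical, and alert thresholds (0.1 or 0.2 are common rules of thumb) are heuristics rather than standards, so they should be tuned per system.

```python
import bisect
import math
from typing import Sequence


def _bin_shares(values: Sequence[float], cut_points: Sequence[float], floor: float) -> list[float]:
    """Share of values falling in each bin defined by the sorted cut points."""
    counts = [0] * (len(cut_points) + 1)
    for v in values:
        counts[bisect.bisect_right(cut_points, v)] += 1
    # Floor empty bins so the log term in the PSI stays finite.
    return [max(c / len(values), floor) for c in counts]


def population_stability_index(
    reference: Sequence[float],
    live: Sequence[float],
    cut_points: Sequence[float],
    floor: float = 1e-4,
) -> float:
    """PSI = sum over bins of (live share - reference share) * ln(live share / reference share)."""
    ref = _bin_shares(reference, cut_points, floor)
    cur = _bin_shares(live, cut_points, floor)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


training_scores = [0.1, 0.2, 0.6, 0.7]
print(population_stability_index(training_scores, training_scores, [0.5]))  # 0.0
print(population_stability_index(training_scores, [0.6, 0.7, 0.8, 0.9], [0.5]))  # large: all live values shifted above 0.5
```

In a deployed system, a check like this would run periodically on a window of recent inputs, with alerts feeding into the human feedback loop rather than triggering automatic retraining.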

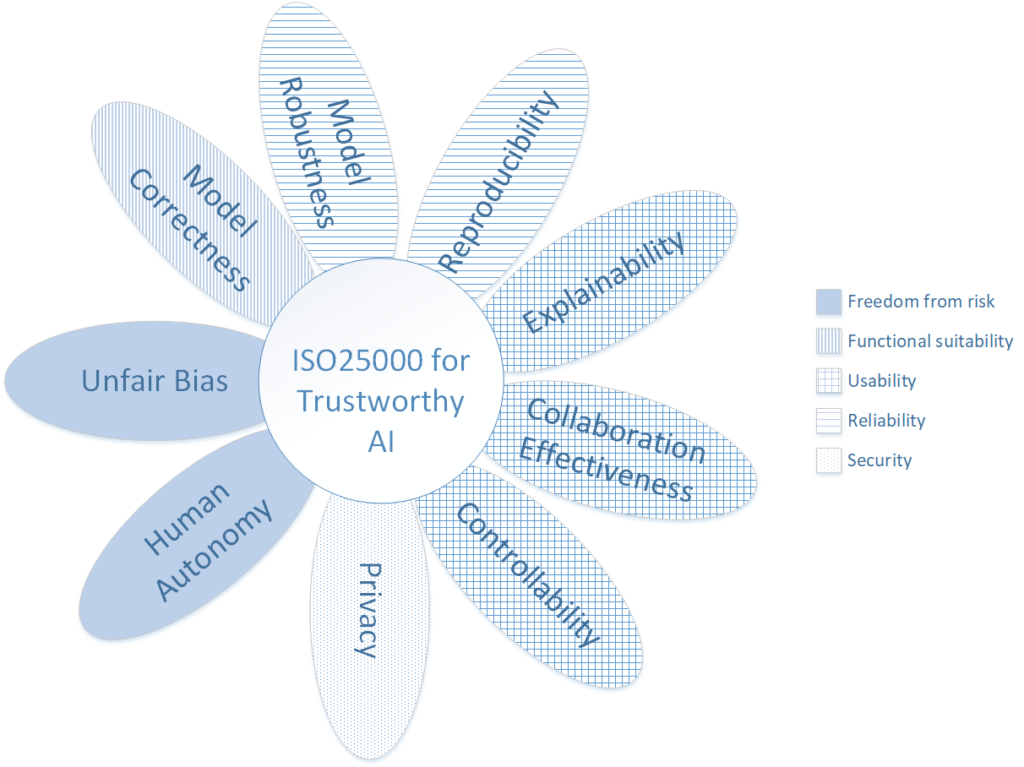

Figure 2. Extension of ISO25000 (SQuaRE) Quality Model for AI Systems – list of additional sub-characteristics, v2 March 2023

Conclusion

In this post I have formulated nine quality characteristics for AI systems that are missing from the ISO25010 System and software quality models. These nine quality characteristics (see Figure 2) summarize all important aspects of AI systems that are discussed in the literature. They emphasize the difference between AI systems and rule-based software systems. Once we stop programming the rules ourselves, we should take extra care of any adverse effect this can have on the end users of our system. On the other hand, we should not forget the traditional software quality characteristics that are already present in ISO25010. Together (see Figure 1), they form a basic dictionary for specifying the quality properties of trustworthy AI systems. In future posts I will present tools, techniques and frameworks for ensuring that your AI system fulfils these quality properties.

References

Ericsson (2020). Trustworthy AI: explainability, safety and verifiability.

Fujii, G., Hamada, K., Ishikawa, F., Masuda, S., Matsuya, M., Myojin, T., … & Ujita, Y. (2020). Guidelines for Quality Assurance of Machine Learning-Based Artificial Intelligence. International Journal of Software Engineering and Knowledge Engineering, 30(11n12), 1589-1606.

Gezici, B., & Tarhan, A. K. (2022). Systematic literature review on software quality for AI-based software. Empirical Software Engineering, 27(3), 1-65.

Habibullah, K. M., Gay, G., & Horkoff, J. (2022). Non-Functional Requirements for Machine Learning: An Exploration of System Scope and Interest. arXiv preprint arXiv:2203.11063.

Habibullah, K. M., Gay, G., & Horkoff, J. (2023). Non-functional requirements for machine learning: understanding current use and challenges among practitioners. Requirements Engineering.

Kästner, C. (2022). Machine Learning in Production: From Models to Products.

Kuwajima, H., & Ishikawa, F. (2019, October). Adapting SQuaRE for quality assessment of artificial intelligence systems. In 2019 IEEE International Symposium on Software Reliability Engineering Workshops (ISSREW) (pp. 13-18). IEEE.

Nakamichi, K., Ohashi, K., Namba, I., Yamamoto, R., Aoyama, M., Joeckel, L., … & Heidrich, J. (2020, August). Requirements-driven method to determine quality characteristics and measurements for machine learning software and its evaluation. In 2020 IEEE 28th International Requirements Engineering Conference (RE) (pp. 260-270). IEEE.

Poth, A., Meyer, B., Schlicht, P., & Riel, A. (2020, December). Quality Assurance for Machine Learning–an approach to function and system safeguarding. In 2020 IEEE 20th International Conference on Software Quality, Reliability and Security (QRS) (pp. 22-29). IEEE.

Siebert, J., Joeckel, L., Heidrich, J., Trendowicz, A., Nakamichi, K., Ohashi, K., … & Aoyama, M. (2021). Construction of a quality model for machine learning systems. Software Quality Journal, 1-29.

Zhang, J. M., Harman, M., Ma, L., & Liu, Y. (2020). Machine learning testing: Survey, landscapes and horizons. IEEE Transactions on Software Engineering.


About Petra Heck

Petra has worked in ICT since 2002, starting as a software engineer, then as a quality consultant, and now as a lecturer in Software Engineering. Petra holds a PhD (on the quality of agile requirements) and has been conducting research into Applied Data Science and Software Engineering since February 2019. Petra regularly gives talks and is the author of various publications, including the book "Succes met de requirements".